10 Powerful eCommerce Data Collection Strategies & Examples

Data collection is vital for data aggregators to provide accurate, actionable insights. Leveraging methods like web scraping, APIs, and automation helps deliver high-quality, real-time data. Overcoming challenges such as privacy compliance and data integration is key to supporting informed business decisions.

In today’s data-driven world, data collection for data aggregators plays a vital role in providing businesses with actionable insights. Data aggregators rely on efficient data-gathering processes to accumulate, filter and structure vast amounts of information from multiple sources.

For data aggregators, the ability to consolidate and manage diverse data sources is essential to delivering valuable insights. Effective data collection ensures that the gathered information is accurate, comprehensive and actionable.

Whether through traditional methods or modern techniques like web scraping and data extraction, mastering these approaches is critical to maintaining high data quality. However, the vast flow of information also presents challenges, such as ensuring privacy compliance, effective data cleansing and seamless integration. Partnering with the right data collection company can help aggregators overcome these obstacles while optimizing data-gathering processes.

This guide takes a comprehensive look at the role of data collection, outlining its benefits, the main collection methods, and the best practices needed to ensure both accuracy and relevance.

Data collection plays a pivotal role in driving data aggregation and enabling data-driven decisions. It supports the accurate and efficient consolidation of diverse datasets, whether sourced through web scraping, APIs or manual inputs. The success of any aggregation process depends on the precision and timeliness of the data collected, as inaccurate or outdated data can distort business outcomes and analytics.

High-quality, up-to-date data fuel the aggregation pipeline, ensuring that the consolidated information is both actionable and reliable. This is essential for critical decision-making processes, such as market forecasting, competitive analysis, and performance tracking. Additionally, the automation of data collection allows for real-time updates and significantly reduces manual effort, enabling data aggregators to process large volumes of data faster and with greater accuracy.

By maintaining a streamlined and well-structured data collection framework, aggregators can overcome common challenges, such as data duplication and inconsistency, ensuring smoother integration and enhanced decision support.
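As an illustration of how duplicate records can be caught before they reach the aggregation pipeline, here is a minimal Python sketch. It assumes each record is a dictionary with hypothetical `name` and `sku` fields and treats two records as duplicates when their normalized name and SKU match. Production systems typically layer fuzzy matching and source-priority rules on top of this kind of exact-key check.

```python
import re
from typing import Iterable


def normalize(record: dict) -> tuple:
    """Build a comparison key from hypothetical 'name' and 'sku' fields."""
    # Lowercase, strip punctuation, and collapse repeated whitespace in the name.
    name = re.sub(r"[^\w\s]", "", record["name"].lower())
    name = " ".join(name.split())
    # SKUs differ only in case or stray whitespace across sources.
    sku = record.get("sku", "").strip().upper()
    return (name, sku)


def deduplicate(records: Iterable[dict]) -> list[dict]:
    """Keep the first record seen for each normalized key."""
    seen = set()
    unique = []
    for rec in records:
        key = normalize(rec)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


records = [
    {"name": "Acme Widget, Blue", "sku": "aw-100", "price": 19.99},
    {"name": "acme widget  blue", "sku": "AW-100 ", "price": 19.99},
    {"name": "Acme Widget, Red", "sku": "aw-101", "price": 21.50},
]
unique = deduplicate(records)
print(len(unique))  # 2
```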

In short, the role of data collection is indispensable in ensuring that aggregated datasets are robust and capable of supporting informed business strategies.

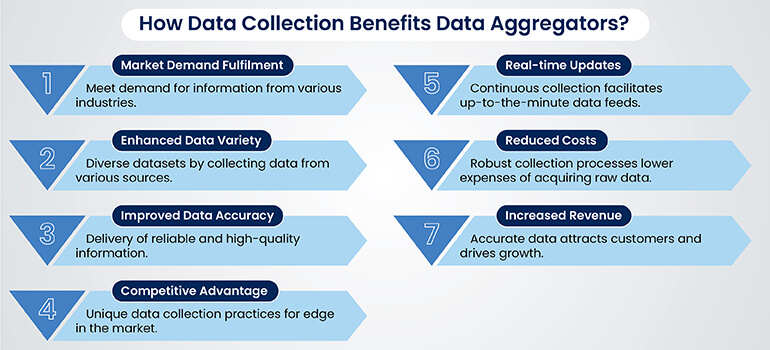

Data collection fuels the success of data aggregators by providing valuable resources for their business model. Data aggregators rely on efficient and comprehensive data collection processes to gain a competitive edge. Here are the key data collection benefits that support their operations:

To effectively gather data from various sources, businesses and data aggregators use extraction methods like web scraping, APIs, manual extraction, and optical character recognition (OCR). Each technique has distinct advantages depending on the type of data (structured or unstructured), the scale of collection (small or large), and the required accuracy or speed. For example, web scraping is well suited to collecting large datasets from websites, while APIs deliver structured data in real time. Below is an overview of key data extraction methods and the tools used to maximize efficiency and accuracy.

Web scraping is an automated method for efficiently extracting large volumes of data from websites. Data aggregators often use it to collect information such as product prices, customer reviews, and competitor data. Common techniques include HTML parsing, DOM traversal, and XPath or CSS selectors that target specific data elements on web pages.

Popular tools for web scraping include BeautifulSoup for smaller tasks, Scrapy for large-scale operations, and Selenium for scraping dynamic JavaScript content. For example, an e-commerce aggregator can use web scraping to gather real-time product data from multiple retailers for price comparisons and market analysis.
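To show what HTML parsing looks like in practice, the sketch below extracts product names and prices from a page fragment. It uses Python's built-in `html.parser` module rather than BeautifulSoup so it runs without extra dependencies, and the markup (the `name` and `price` class names) is a hypothetical example. A real retailer's page structure would need its own selectors and more defensive handling of missing fields.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect (name, price) pairs from elements with hypothetical
    class="name" and class="price" attributes."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished (name, price) tuples
        self._field = None   # which field the current text belongs to
        self._current = {}   # partially assembled product

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "name" in classes:
            self._field = "name"
        elif "price" in classes:
            self._field = "price"

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        # Ignore whitespace and text outside the fields we care about.
        if self._field is None or not data.strip():
            return
        self._current[self._field] = data.strip()
        if "name" in self._current and "price" in self._current:
            # Convert "$1,024.50" into a float.
            price = float(self._current["price"].lstrip("$").replace(",", ""))
            self.products.append((self._current["name"], price))
            self._current = {}


SAMPLE_HTML = """
<div class="product"><span class="name">USB-C Cable</span>
  <span class="price">$12.99</span></div>
<div class="product"><span class="name">Standing Desk</span>
  <span class="price">$1,024.50</span></div>
"""

parser = ProductParser()
parser.feed(SAMPLE_HTML)
print(parser.products)
```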

Ethical web scraping involves following best practices, such as respecting the site's robots.txt file, avoiding the collection of sensitive personal data, adding delays between requests to prevent server overload, and complying with applicable data protection regulations.
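Two of these practices, honoring robots.txt and spacing out requests, can be sketched with Python's standard `urllib.robotparser` module. The crawler name, URLs, and the injected `fetch` function are hypothetical placeholders so the example runs offline; a real crawler would download robots.txt from the target site and also handle errors and retries.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "ExampleAggregatorBot/1.0"  # hypothetical crawler name


def build_robot_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def polite_crawl(urls, rules, fetch, default_delay=2.0):
    """Fetch only URLs that robots.txt allows, pausing between requests.

    `fetch` is any callable that takes a URL and returns page content;
    it is injected so this sketch runs without network access.
    """
    # Honor a Crawl-delay directive if the site declares one.
    delay = rules.crawl_delay(USER_AGENT) or default_delay
    results = {}
    for url in urls:
        if not rules.can_fetch(USER_AGENT, url):
            continue  # skip paths the site has disallowed
        results[url] = fetch(url)
        time.sleep(delay)
    return results


robots = """
User-agent: *
Disallow: /checkout/
Crawl-delay: 1
"""
rules = build_robot_rules(robots)
pages = polite_crawl(
    ["https://shop.example.com/products/1", "https://shop.example.com/checkout/cart"],
    rules,
    fetch=lambda url: f"<html>{url}</html>",
)
print(list(pages))
```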

Application Programming Interfaces (APIs) provide a standardized way to access data from various online platforms and services. They enable data aggregators to collect structured and real-time data efficiently. One of the key advantages of using APIs for data collection is the ability to retrieve consistent, up-to-date information directly from a reliable source, thus reducing the need for manual data extraction.

Interacting with APIs involves a few key steps: authenticating access, sending requests (such as GET or POST) and handling responses, which are usually in formats like JSON or XML. Popular APIs for data collection include the Twitter API for social media data, Google Maps API for location data and the Facebook Graph API for social insights.
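The three steps described above (authenticate, send a GET request, parse a JSON response) can be sketched with Python's standard library. The endpoint `https://api.example.com/v1`, the bearer-token scheme, and the `data` field in the response are assumptions for illustration; each real API documents its own authentication method, URL layout, and response schema.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API_BASE = "https://api.example.com/v1"  # hypothetical endpoint


def build_request(resource: str, params: dict, token: str) -> Request:
    """Prepare an authenticated GET request.

    Bearer-token auth is an assumption; real APIs may use API keys,
    OAuth 2.0 flows, or signed requests instead.
    """
    url = f"{API_BASE}/{resource}?{urlencode(params)}"
    return Request(
        url,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
        method="GET",
    )


def parse_response(body: bytes) -> list[dict]:
    """Decode a JSON body and return its hypothetical 'data' list."""
    payload = json.loads(body.decode("utf-8"))
    return payload.get("data", [])


def fetch(resource: str, params: dict, token: str) -> list[dict]:
    """Send the request and parse the result (requires network access)."""
    with urlopen(build_request(resource, params, token), timeout=10) as resp:
        return parse_response(resp.read())


# Offline demonstration: inspect the request and parse a canned response body.
req = build_request("products", {"category": "laptops", "limit": 2}, "demo-token")
print(req.full_url, req.get_method())
print(parse_response(b'{"data": [{"id": 1, "price": 899.0}]}'))
```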

APIs streamline the data collection process, making it faster and more reliable, especially when working with large datasets from multiple platforms.
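Large datasets are usually returned in pages, and one common pattern is cursor-based pagination, where each response points to the next page. The sketch below assumes a hypothetical response shape with `items` and `next_cursor` keys and uses a fake in-memory API in place of real HTTP calls; the exact pagination scheme (cursors, page numbers, or offsets) varies by provider.

```python
from typing import Callable, Iterator, Optional


def paginate(fetch_page: Callable[[Optional[str]], dict]) -> Iterator[dict]:
    """Yield every item across pages using cursor-based pagination.

    `fetch_page(cursor)` is assumed to return a dict shaped like
    {"items": [...], "next_cursor": "..." or None}.
    """
    cursor = None
    while True:
        page = fetch_page(cursor)
        yield from page["items"]
        cursor = page.get("next_cursor")
        if not cursor:
            break  # no further pages


# Fake three-page API standing in for real HTTP calls.
FAKE_PAGES = {
    None: {"items": [{"id": 1}, {"id": 2}], "next_cursor": "p2"},
    "p2": {"items": [{"id": 3}], "next_cursor": "p3"},
    "p3": {"items": [{"id": 4}], "next_cursor": None},
}

all_items = list(paginate(lambda cursor: FAKE_PAGES[cursor]))
print([item["id"] for item in all_items])  # [1, 2, 3, 4]
```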

Surveys and questionnaires are effective tools for collecting primary data directly from target audiences. They allow businesses to gather insights into customer preferences, behaviors and opinions. To ensure accurate results, it’s crucial to design survey questions that are clear, concise and unbiased, allowing respondents to provide honest and valuable feedback.

Surveys can be distributed through various methods, including online platforms, email, phone calls or in-person interactions, depending on the audience and research goals. Tools such as SurveyMonkey, Google Forms, and Typeform make it easy to create, distribute and manage surveys, helping streamline the data collection process and improve response rates.

When used effectively, surveys and questionnaires provide valuable insights that guide decision making and strategy development.

Social media monitoring is a powerful method for collecting valuable data from platforms like Twitter, Facebook, and Instagram. Businesses use this data to track conversations, analyze sentiments, and identify emerging trends, offering deep insights into customer behavior and market dynamics.

Tools like Brandwatch, Hootsuite, and Sprout Social help automate the tracking of relevant keywords, hashtags and mentions, enabling real-time analysis of public sentiment. However, social media data collection comes with challenges, such as handling large volumes of information, filtering out noise and navigating privacy concerns.
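The keyword and hashtag tracking that these tools automate can be approximated in a few lines once posts have been collected. This is a simplified sketch, not how Brandwatch, Hootsuite, or Sprout Social work internally: it assumes posts are plain strings, keeps only posts that mention a tracked keyword as a crude noise filter, and counts hashtags and @mentions case-insensitively.

```python
import re
from collections import Counter

HASHTAG = re.compile(r"#(\w+)")
MENTION = re.compile(r"@(\w+)")


def track(posts, keywords):
    """Count hashtags and mentions in posts that contain a tracked keyword."""
    keywords = [k.lower() for k in keywords]
    hashtags, mentions = Counter(), Counter()
    for text in posts:
        lowered = text.lower()
        # Noise filter: skip posts that mention none of the tracked keywords.
        if not any(k in lowered for k in keywords):
            continue
        hashtags.update(tag.lower() for tag in HASHTAG.findall(text))
        mentions.update(name.lower() for name in MENTION.findall(text))
    return hashtags, mentions


posts = [
    "Loving the new #EcoBottle from @GreenCo! #sustainability",
    "@GreenCo shipping was slow on my ecobottle order #EcoBottle",
    "Unrelated post about #Mondays",
]
tags, handles = track(posts, ["ecobottle"])
print(tags.most_common(2), handles)
```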

Despite these challenges, social media monitoring remains an essential tool for businesses seeking to understand audience perspectives and adapt their strategies accordingly.

Sensor data collection is rapidly growing due to the proliferation of IoT devices and wearables, which capture real-time data across various industries. These devices provide critical insights for applications such as health tracking, smart homes, and industrial monitoring, enabling more informed decisions and automation.

Collecting sensor data involves handling various data formats, such as JSON or CSV, and requires efficient storage solutions to manage the high volumes generated. Processing these data for actionable insights involves real-time analysis, often through cloud platforms, ensuring timely responses to the environment being monitored.
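As a sketch of how mixed formats can be brought into one shape for analysis, the example below parses JSON and CSV temperature readings into common records, then averages them per device and flags any device whose readings exceed a threshold. The device names, the `temp_c` field, and the alert rule are hypothetical; a real IoT pipeline would process readings as a stream and write them to dedicated storage.

```python
import csv
import io
import json
from statistics import mean


def from_json(payload: str) -> list[dict]:
    """Parse JSON readings shaped like [{"device": ..., "temp_c": ...}, ...]."""
    return [{"device": r["device"], "temp_c": float(r["temp_c"])} for r in json.loads(payload)]


def from_csv(payload: str) -> list[dict]:
    """Parse CSV readings with a header row: device,temp_c."""
    reader = csv.DictReader(io.StringIO(payload))
    return [{"device": r["device"], "temp_c": float(r["temp_c"])} for r in reader]


def summarize(readings: list[dict], threshold: float) -> dict:
    """Average temperature per device and flag devices above the threshold."""
    by_device = {}
    for r in readings:
        by_device.setdefault(r["device"], []).append(r["temp_c"])
    return {
        device: {"avg": round(mean(vals), 2), "alert": max(vals) > threshold}
        for device, vals in by_device.items()
    }


json_feed = '[{"device": "freezer-1", "temp_c": -18.5}, {"device": "freezer-1", "temp_c": -17.5}]'
csv_feed = "device,temp_c\nfreezer-2,-19.0\nfreezer-2,-4.0\n"

readings = from_json(json_feed) + from_csv(csv_feed)
summary = summarize(readings, threshold=-10.0)
print(summary)
```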

As IoT adoption increases, sensor data becomes a vital source for enhancing operational efficiency and improving user experiences.

Data aggregators collect a wide range of data from various industries, each with its own unique data types and sources. Below are examples of key industries and the types of data collected:

Real Estate:

Real estate aggregators gather property listings, pricing trends, and neighborhood data.

Data sources include MLS databases, real estate websites and public records. Web scraping and APIs are commonly used to compile comprehensive datasets. Partnering with MLS data extraction experts can ensure accurate, up-to-date information from multiple listing services, thereby enhancing the overall quality of the data.

Healthcare:

Healthcare aggregators collect patient records, treatment outcomes, and health trends.

Sources include hospital databases, wearable devices and health-related apps. Sensor data from IoT devices and surveys are frequently employed to gather real-time health information.

E-commerce:

E-commerce aggregators focus on data such as product prices, customer reviews, and sales trends.

Sources include retailer websites, customer feedback platforms, and social media. Web scraping, APIs and social media monitoring tools are key to collecting and analyzing this data.

Travel and Hospitality:

Travel and hospitality aggregators collect data such as flight and hotel availability, pricing, customer reviews, and seasonal trends.

Data sources include booking websites, travel portals, and customer feedback platforms. APIs and web scraping are essential methods for real-time data collection in this fast-paced industry.

Job Portals:

Job aggregators collect job postings, salary information, and applicant data.

Sources include job portals, company career pages and professional networking platforms. Web scraping and APIs are frequently used to gather and update this data in real time.
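Keeping postings current usually means comparing each new crawl with the previous one. One way to do this, shown below as a minimal sketch, is to fingerprint each posting by hashing its relevant fields and then classify postings as new, removed, or changed. The posting IDs and field names are hypothetical, and this is only one possible change-detection approach.

```python
import hashlib
import json


def fingerprint(posting: dict) -> str:
    """Stable SHA-256 hash of the fields we track (field names are hypothetical)."""
    relevant = {k: posting.get(k) for k in ("title", "company", "salary", "location")}
    encoded = json.dumps(relevant, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def diff_snapshots(previous: dict, current: dict) -> dict:
    """Compare two crawls keyed by posting ID and classify the differences."""
    prev_hashes = {pid: fingerprint(p) for pid, p in previous.items()}
    curr_hashes = {pid: fingerprint(p) for pid, p in current.items()}
    return {
        "new": sorted(curr_hashes.keys() - prev_hashes.keys()),
        "removed": sorted(prev_hashes.keys() - curr_hashes.keys()),
        "changed": sorted(
            pid for pid in curr_hashes.keys() & prev_hashes.keys()
            if curr_hashes[pid] != prev_hashes[pid]
        ),
    }


yesterday = {
    "j1": {"title": "Data Analyst", "company": "Acme", "salary": "70k"},
    "j2": {"title": "QA Engineer", "company": "Globex", "salary": "65k"},
}
today = {
    "j1": {"title": "Data Analyst", "company": "Acme", "salary": "75k"},
    "j3": {"title": "Recruiter", "company": "Initech", "salary": "55k"},
}
changes = diff_snapshots(yesterday, today)
print(changes)
```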

Each industry relies on specific data sources and collection methods tailored to its needs, ensuring data accuracy and relevance for better decision making.

To ensure reliable and efficient data collection, data aggregators should follow these key best practices.

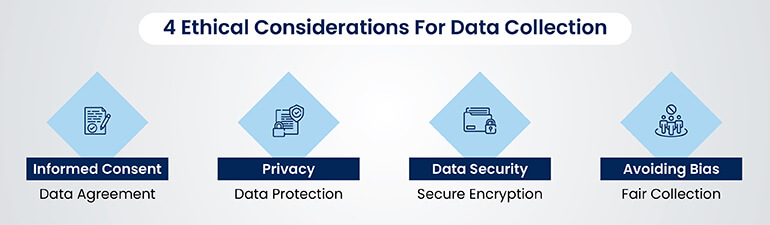

When collecting data, it’s essential to address key ethical considerations to ensure compliance and protect individuals’ rights.

Key ethical considerations for data collection include:

A US-based B2B data-selling company partnered with Hitech BPO to gather comprehensive and accurate information on both current and former attorney members registered with the California Bar Council. This project aimed to compile data on attorneys practicing in California, distinguishing itself by its scale and complexity.

We gathered 309K attorney profiles in 45 days to establish a comprehensive and precise data repository.

A real estate periodical publisher faced limitations with its subscription database, which hampered mailing list creation and customer outreach. To expand its reach and enhance targeted marketing, the publisher partnered with Hitech BPO.

Compiling 28K prospective client records from six counties for marketing campaigns boosted the circulation of the real estate periodicals.

A Tennessee property data solutions provider faced challenges in collecting accurate and up-to-date property information from various sources, including diverse document types and online platforms across multiple states and counties. To streamline data aggregation, maintain quality, and ensure currency, the company partnered with Hitech BPO for efficient data management and collection.

Aggregating 40+ million real estate records annually boosts operational profitability.

A California-based video communication software company with 700,000 business customers sought to maximize revenue by upselling and cross-selling its solutions. To achieve this, it partnered with Hitech BPO to enhance its CRM database. This involved collecting and refining customer data and social media information to improve customer profiles, enabling more effective marketing and sales efforts.

We enriched 2500+ customer profiles every day for the video communication company.

To maintain high data quality, aggregators implement several key processes:

These practices help data aggregators ensure that the information they provide is accurate, consistent and ready for actionable insights.

Data aggregators face several challenges when collecting and managing vast amounts of data. Below are some of the most common data collection challenges they encounter.

When choosing a data collection partner, it’s essential to evaluate several factors to ensure they meet your business needs. Below are key considerations:

As data collection evolves, emerging technologies like AI, IoT, 5G, blockchain, edge computing, and quantum computing are reshaping the landscape. These innovations enhance the speed, accuracy and scale of data-gathering processes. AI and machine learning enable smarter data collection through automation and predictive analytics, while IoT and 5G provide real-time data streams from connected devices.

Continuous improvement in data collection methods is crucial, as businesses seek more efficient ways to gather and process vast amounts of data. Moreover, the growing significance of data privacy is leading to more stringent regulations and a requirement for ethical, transparent practices. Ensuring compliance with privacy laws and adopting privacy-focused technologies will be essential as the data ecosystem expands.
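One example of a privacy-focused technique is pseudonymization: replacing direct identifiers such as email addresses with keyed hashes before data is stored or shared. The sketch below uses HMAC-SHA256 from Python's standard library; the key and record fields are hypothetical placeholders. Pseudonymized data generally still counts as personal data under regulations such as the GDPR, so this reduces exposure rather than removing compliance obligations.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; load real keys from a secrets manager.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 digest.

    The same input always maps to the same token, so records can still be
    joined across datasets, but the token cannot be reversed without the key.
    """
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


record = {"email": "Jane.Doe@example.com", "city": "Austin", "orders": 3}
safe_record = {**record, "email": pseudonymize(record["email"])}
print(safe_record["email"][:16], safe_record["city"])
```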

In the dynamic world of data aggregation, a well-structured data collection strategy is vital for success. This guide has highlighted the importance of using diverse data sources, adopting advanced tools, and ensuring that data is accurate and relevant. For data aggregators, data quality, adherence to ethical standards, and compliance with privacy regulations must remain top priorities.

Further, as technology evolves, continuous improvement in data collection methods—through innovations like AI, IoT, and real-time data streams—will be essential to staying competitive. Ultimately, strong data collection processes empower aggregators to provide deeper insights and better serve their clients.
