How Human Data Annotations Shape Generative AI

Generative AI supports creative workflows, but its success relies on high-quality training data. Human annotators play a crucial role in ensuring accuracy, ethical considerations, and nuanced outputs, shaping the future of generative AI.

Generative AI has emerged as a transformative force in technology, with its ability to support code, content, and communication workflows. It is rapidly being adopted across diverse industries, including e-commerce, real estate, healthcare, gaming, entertainment, marketing, and drug discovery, with opportunities to redefine the boundaries of creativity and innovation.

By 2030, activities that account for up to 30 percent of the hours currently worked across the US economy could be automated – a trend accelerated by generative AI.

However, the success of generative AI hinges on the quality and accuracy of the datasets used to train AI and ML models. This makes human data annotation indispensable. Human annotators provide the labeled data that fuels the learning process of generative AI models, enabling them to generate realistic, relevant, ethical, and human-like outputs.

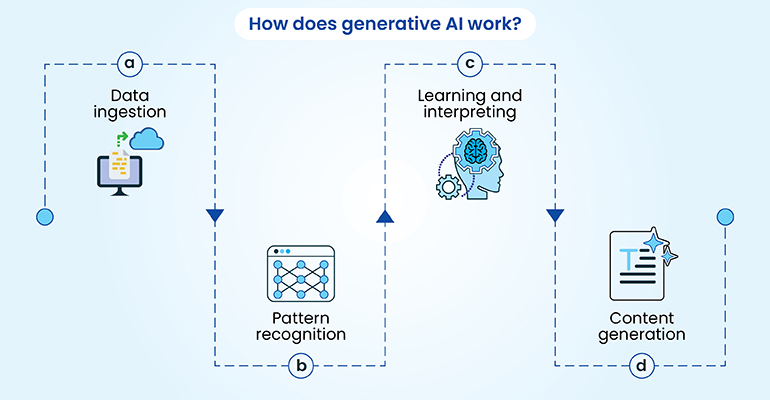

Generative AI refers to a class of artificial intelligence algorithms that learn from input training data to generate new data with similar characteristics. Instead of simply classifying or recognizing existing data, generative AI creates novel content, pushing the boundaries of what machines can produce.

There are various types of generative models, including Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and diffusion models, each with its own strengths and applications.

Generative AI is being applied across a wide range of fields, from creating realistic images and generating human-like text to composing music and even designing new drugs.

The key to building successful generative AI models lies in the availability of massive and diverse high-quality training datasets that are accurately labeled to expose the underlying patterns that help generate meaningful outputs. A recent report by McKinsey estimates that generative AI could add the equivalent of $2.6 trillion to $4.4 trillion annually across the 63 use cases they analyzed. This highlights the immense potential of generative AI and its reliance on robust data annotation.

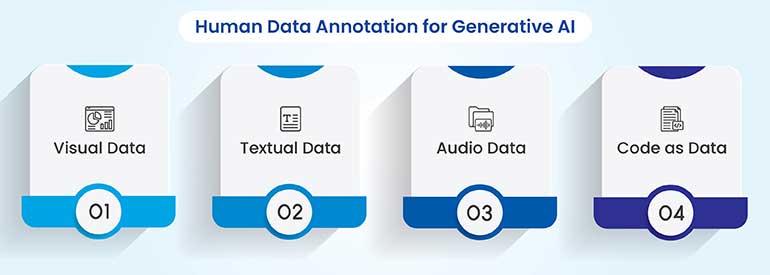

Generative AI models, particularly Generative Pre-trained Transformers (GPT), are trained on vast datasets comprising unstructured and semi-structured data, including text, images, audio, and video. Each of these data types requires its own kind of annotation.

Human data annotation is not just a technical necessity but a strategic advantage for generative AI development. It ensures contextual accuracy, enhances data quality and infuses a layer of cognitive understanding essential for creating sophisticated AI systems. Here’s how it helps:

Human data annotation serves as the foundation for generative AI by providing the “ground truth” that models rely on to learn effectively. High-quality annotations enable models to identify patterns, relationships, and contexts within data, which is critical for generating coherent and meaningful outputs.

For instance, in natural language processing (NLP), annotations, such as entity tagging or sentiment labeling, help models understand linguistic nuances and context in AI applications. Without accurate annotations, generative models may produce biased or irrelevant results, undermining their utility.

The quality of data annotations directly impacts model performance. Also, consistent annotations across datasets ensure the model’s robustness and adaptability to real-world scenarios.

The unique interpretative abilities of human annotators are indispensable for generative AI. Machines lack the nuanced understanding of the context, tone, and cultural subtleties that humans inherently possess.

For instance, annotators can identify implicit meanings or emotional undertones in text or imagery, enabling models to generate content that aligns with human expectations.

Human judgment is crucial for addressing ethical concerns and reducing errors in annotations. Recent advancements show that incorporating human reviews reduces annotation errors by 25%, thereby improving model reliability.

Domain-specific expertise enhances this process; experts in fields like medicine or finance ensure that annotations meet specialized requirements, boosting the contextual accuracy of AI systems.

AI-assisted labeling helps bridge the gap between manual and automated data labeling, delivering efficiency gains and improving the overall quality of labeled data. The future of data annotation lies in a synergistic collaboration between humans and AI.

AI-assisted labeling tools are emerging as powerful allies for human annotators, offering a range of capabilities to enhance efficiency and accuracy. They can pre-label data, suggest potential labels, and even automate repetitive annotation tasks. This not only accelerates the annotation process but also helps reduce human error and improve consistency.

For instance, studies have shown that integrating AI assistance can reduce the time required for data annotation by up to 60%. This underscores the transformative potential of human–AI collaboration for data annotation. However, it’s crucial to incorporate human feedback for the continuous refinement of these AI algorithms.

The human element remains crucial in guiding and refining AI assistance. Human feedback on AI-generated labels or suggestions helps to improve the accuracy and effectiveness of these tools. This iterative feedback loop allows AI algorithms to learn from human expertise and adapt to specific annotation requirements.

Human annotators can identify errors, provide nuanced interpretations and offer valuable insights that help improve the accuracy and effectiveness of AI assistance.

However, there are ethical considerations regarding AI assistance in data annotation. Concerns about job displacement arise as automation increases, necessitating a balance between efficiency and human oversight. Companies must ensure that ethical guidelines are followed to prevent bias and to maintain fairness in AI systems.

Thus, while AI tools improve annotation processes, human involvement remains vital for ethical and effective outcomes.

Human data annotation plays a crucial role in shaping the capabilities of generative AI models across various domains. Here are some key types of human data annotation tasks that fuel the development of generative AI:

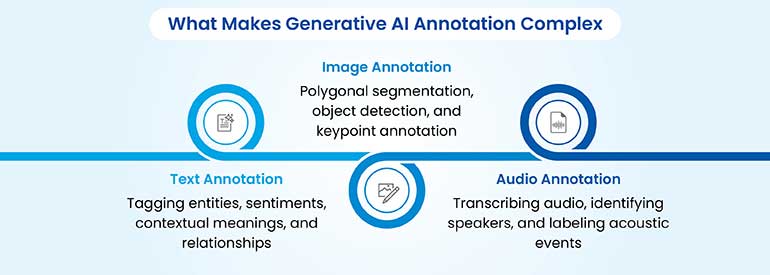

Image annotation is essential for training generative models that create realistic images and videos. This involves tasks like object detection, where annotators identify and label objects within images. Image segmentation further refines this by classifying each pixel in an image, enabling more precise object generation.

Image captioning adds another layer by providing textual descriptions of images, helping generative models understand the relationship between visual content and language.
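As a rough illustration of what an object detection label can look like in practice, here is a minimal sketch of a bounding box record, together with intersection over union (IoU), a common way to compare the boxes two annotators drew around the same object. The class name `BoxAnnotation`, its field names, and the sample coordinates are assumptions made for this example, not the schema of any particular annotation platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxAnnotation:
    """One labeled object: a class name plus the pixel corners of its box."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


def iou(a: BoxAnnotation, b: BoxAnnotation) -> float:
    """Intersection over union of two boxes, a value between 0 and 1."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0  # the boxes do not overlap
    intersection = inter_w * inter_h
    union = a.area() + b.area() - intersection
    return intersection / union


# Hypothetical boxes drawn by two annotators around the same car.
annotator_1 = BoxAnnotation("car", 10, 10, 110, 60)
annotator_2 = BoxAnnotation("car", 20, 10, 120, 60)
overlap = iou(annotator_1, annotator_2)
print(f"IoU between annotators: {overlap:.2f}")
```

A low IoU on the same object suggests the two annotators interpreted the object's boundaries differently, which is usually a cue to revisit the labeling guidelines.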

Text annotation is crucial for training generative models that produce human-like text. Sentiment analysis involves labeling text with its emotional tone, enabling AI to generate text with specific sentiments. Named entity recognition identifies and classifies named entities in text, such as people, organizations, and locations, improving the accuracy and coherence of the generated text.

Text summarization involves creating concise summaries of longer texts, which helps generative models learn to generate concise and informative content.
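To make the text annotation tasks above more concrete, the sketch below shows one possible way to store a sentence with a sentiment label and named entity spans. The classes `EntitySpan` and `TextAnnotation`, the helper `span`, the label names, and the sample sentence are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class EntitySpan:
    start: int  # inclusive character offset into the text
    end: int    # exclusive character offset into the text
    label: str  # entity type, e.g. PERSON, ORG, LOCATION


@dataclass
class TextAnnotation:
    text: str
    sentiment: str
    entities: list[EntitySpan] = field(default_factory=list)

    def entity_texts(self) -> list[tuple[str, str]]:
        """Return (surface text, label) pairs for every annotated span."""
        return [(self.text[e.start:e.end], e.label) for e in self.entities]


def span(text: str, phrase: str, label: str) -> EntitySpan:
    """Build a span for the first occurrence of phrase in text."""
    start = text.index(phrase)
    return EntitySpan(start, start + len(phrase), label)


# Invented sentence and labels, used only to show the record shape.
text = "Maria Lopez praised the new clinic that Acme Health opened in Austin."
record = TextAnnotation(
    text=text,
    sentiment="positive",
    entities=[
        span(text, "Maria Lopez", "PERSON"),
        span(text, "Acme Health", "ORG"),
        span(text, "Austin", "LOCATION"),
    ],
)
print(record.entity_texts())
```

Storing character offsets rather than only the entity strings keeps each label tied to an exact position in the text, which matters when the same name appears more than once.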

Audio annotation is essential for training generative models that produce realistic speech and sounds. Speech transcription involves converting spoken language into written text, enabling AI to generate speech from text.

By labeling audio segments with the corresponding speaker, speaker identification improves generative models’ capacity for generating diverse voices. Sound classification categorizes different sounds, allowing AI to generate specific sound effects or music.

Code annotation is crucial for training generative models that can write and understand code. Code documentation involves adding comments and explanations to code, helping AI models learn coding conventions and best practices.

Code summarization creates concise summaries of code functionality, enabling AI to generate code that meets specific requirements. Bug detection involves identifying errors in code, which helps generative models learn to generate error-free code.

Human data annotation is essential for training generative AI models across various data types. By accurately labeling images, text, audio, and code, human annotators enable AI to generate realistic and meaningful content, shaping the future of creative AI applications.

Optimizing the annotation process is crucial for efficient and high-quality data annotation, especially in large-scale generative AI projects. This involves streamlining workflows, effectively managing resources and ensuring consistency across annotation teams.

Leveraging the right tools and technologies can significantly enhance the efficiency of the annotation process. Annotation platforms like Labelbox and Prodigy provide intuitive interfaces, project management features, and quality control mechanisms to streamline workflows.

AI-powered pre-labeling tools can automate initial annotation tasks, such as object detection or text tagging, allowing human annotators to focus on more complex and nuanced aspects. These tools not only accelerate the annotation process but also improve accuracy and consistency.
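One way such a pre-labeling step might be wired up is sketched below: a model proposes a label with a confidence score, and low-confidence items are sent to annotators for a full review while the rest receive a lighter spot check. The function `route_prelabels`, the 0.9 threshold, and the keyword-based `toy_model` are assumptions made for this example; a real project would substitute its own trained model and a threshold tuned on its own data.

```python
from typing import Callable, NamedTuple


class PreLabel(NamedTuple):
    item: str
    label: str
    confidence: float


def route_prelabels(
    items: list[str],
    model: Callable[[str], tuple[str, float]],
    threshold: float = 0.9,
) -> tuple[list[PreLabel], list[PreLabel]]:
    """Split model suggestions into a spot-check queue and a full-review queue."""
    spot_check: list[PreLabel] = []
    full_review: list[PreLabel] = []
    for item in items:
        label, confidence = model(item)
        suggestion = PreLabel(item, label, confidence)
        if confidence >= threshold:
            spot_check.append(suggestion)   # humans sample and confirm these
        else:
            full_review.append(suggestion)  # humans label these carefully
    return spot_check, full_review


def toy_model(text: str) -> tuple[str, float]:
    """Hypothetical stand-in for a trained sentiment classifier."""
    if "great" in text:
        return "positive", 0.97
    if "terrible" in text:
        return "negative", 0.95
    return "neutral", 0.55


spot_check, full_review = route_prelabels(
    ["great phone", "terrible battery", "it arrived on Tuesday"], toy_model
)
print(len(spot_check), "for spot check;", len(full_review), "for full review")
```

Both queues still end with a human decision; the threshold only controls how much annotator attention each item receives.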

For large-scale generative AI projects, effective resource allocation and management are essential. This involves carefully managing annotation teams, assigning tasks based on expertise and tracking progress to ensure timely completion. Clear communication channels and collaboration tools are crucial for coordinating efforts and addressing challenges.

Project management methodologies, such as Agile or Kanban, can be adopted to ensure efficient task management and progress tracking.

Consistency in annotation is vital for training reliable generative AI models. Standardized procedures, clear guidelines, and regular feedback mechanisms are essential for maintaining consistency across annotation teams. Calibration exercises, where annotators discuss and align challenging cases, can further improve inter-annotator agreement.
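Inter-annotator agreement can be put into numbers. A widely used measure for two annotators is Cohen's kappa, which corrects the raw agreement rate for the agreement expected by chance. The sketch below is a plain Python version under the assumption that both annotators labeled the same items in the same order; the sample labels are invented for the example.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty item set")
    n = len(labels_a)
    # Share of items on which the two annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Invented labels from two annotators on the same eight items.
annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
annotator_b = ["pos", "pos", "neg", "pos", "pos", "neg", "neg", "neg"]
kappa = cohens_kappa(annotator_a, annotator_b)
print(f"Cohen's kappa: {kappa:.2f}")
```

Here the annotators agree on six of eight items (0.75), but because chance alone would yield 0.5 agreement, kappa comes out at 0.5. Tracking this value before and after a calibration exercise is one way to see whether the discussion actually aligned the team.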

Regular quality checks and audits can identify and address inconsistencies, ensuring high-quality annotations for training robust generative AI models.
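A common form of quality check is to seed the work queue with items whose correct label is already known and to score each annotator against them. The sketch below assumes such a "gold" set exists; the function name `audit_against_gold`, the 0.8 accuracy threshold, and the sample data are hypothetical.

```python
def audit_against_gold(
    submissions: dict[str, dict[str, str]],
    gold: dict[str, str],
    min_accuracy: float = 0.8,
) -> dict[str, dict[str, object]]:
    """Score each annotator on the gold items they labeled and flag low scores.

    submissions maps annotator -> {item_id: label}; gold maps item_id -> label.
    """
    report: dict[str, dict[str, object]] = {}
    for annotator, labels in submissions.items():
        scored = [item_id for item_id in labels if item_id in gold]
        if not scored:
            continue  # this annotator has not seen any gold items yet
        correct = sum(labels[item_id] == gold[item_id] for item_id in scored)
        accuracy = correct / len(scored)
        report[annotator] = {
            "accuracy": accuracy,
            "flagged": accuracy < min_accuracy,
        }
    return report


# Invented gold labels and submissions for two annotators.
gold = {"img1": "cat", "img2": "dog", "img3": "cat", "img4": "bird"}
submissions = {
    "annotator_1": {"img1": "cat", "img2": "dog", "img3": "cat", "img4": "bird"},
    "annotator_2": {"img1": "cat", "img2": "cat", "img3": "cat", "img4": "dog"},
}
report = audit_against_gold(submissions, gold)
print(report)
```

A flag here is a prompt for feedback or retraining rather than a verdict, since a low score can also point to an unclear guideline or a mislabeled gold item.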

Optimizing the data annotation process involves leveraging efficient tools, managing resources effectively, and ensuring consistency across teams. By streamlining workflows and maintaining high-quality annotations, we can accelerate the development of robust and reliable generative AI models.

Human data annotation plays a critical role in shaping the ethical development and deployment of generative AI. Annotators are responsible for identifying and mitigating biases that can creep into training data, ensuring that AI models are fair, accountable, and transparent.

Bias can manifest in various forms, such as confirmation bias, where annotators favor information that confirms their existing beliefs. To mitigate bias, it’s essential to have diverse annotation teams with varied backgrounds and perspectives. This diversity helps to identify and address potential biases that may be ingrained in individual viewpoints.

Techniques like blind annotation, where annotators are unaware of the context or purpose of the data, can also help reduce bias. Furthermore, AI-powered bias detection tools can be employed to identify and flag potential biases in annotated data, prompting further review and correction.
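Automated bias checks can be much simpler than the phrase "AI-powered" suggests. As one minimal sketch, the code below compares how often a given label is applied across groups of data and flags the dataset for human review when the gap is large. The group names, the "toxic" label, and the 0.2 gap threshold are assumptions made for this example, and a gap on its own does not prove bias; it only marks where reviewers should look.

```python
from collections import defaultdict


def label_rate_by_group(
    records: list[tuple[str, str]], target_label: str
) -> dict[str, float]:
    """For (group, label) pairs, return the share of target_label per group."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for group, label in records:
        totals[group] += 1
        if label == target_label:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


def flag_disparity(rates: dict[str, float], max_gap: float = 0.2) -> bool:
    """Flag for review when the highest and lowest rates differ by more than max_gap."""
    return max(rates.values()) - min(rates.values()) > max_gap


# Invented annotations: (group the text belongs to, label an annotator assigned).
records = [
    ("dialect_a", "toxic"), ("dialect_a", "ok"),
    ("dialect_a", "ok"), ("dialect_a", "ok"),
    ("dialect_b", "toxic"), ("dialect_b", "toxic"),
    ("dialect_b", "ok"), ("dialect_b", "ok"),
]
rates = label_rate_by_group(records, "toxic")
needs_review = flag_disparity(rates)
print(rates, "needs review:", needs_review)
```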

Human annotators play a vital role in ensuring ethical AI training. They can identify and flag sensitive or potentially harmful content, ensuring that generative AI models are not trained on data that could perpetuate stereotypes or discrimination.

Annotators can also contribute to the development of ethical guidelines and best practices for data annotation, promoting fairness and accountability in AI applications. By actively participating in these processes, human annotators contribute to the responsible development and deployment of generative AI technologies.

By addressing bias and ethical considerations, human annotators contribute to the development of fair, responsible and trustworthy generative AI.

Human data annotation remains an indispensable force in shaping the present and future of generative AI. The human touch brings accuracy, nuance, and ethical considerations to the forefront of AI development. As we move forward, collaboration between human expertise and AI assistance will be crucial in unlocking further advancements and applications of generative AI.

By embracing this partnership, we can ensure that generative AI technologies are developed responsibly and contribute to a better future for all. Human annotators, with their ability to understand context, resolve ambiguities, and provide ethical guidance, will continue to play a vital role in shaping the generative AI landscape. Through their efforts, we can harness the full potential of generative AI to drive innovation, creativity, and positive impacts across various domains.
