
With direct, authorized access to structured pricing data, APIs remove much of the need for tedious web scraping of eCommerce sites. The reliable insights they provide help businesses shape better pricing strategies to attract and retain customers.

Dynamic pricing, which adjusts prices to influence purchase decisions and optimize revenue, has become a necessity. However, building a sound pricing strategy on top of eCommerce web scraping is a challenge. Price scraping itself needs a well-planned strategy that covers identifying target sources, legal compliance, data accuracy, integration, and more. Inaccurate scraped price data leads to poor pricing decisions, loss of competitive advantage, and even reputational damage.

Using APIs for dynamic eCommerce price scraping is more complex to set up but efficient and reliable. Its advantages include authorized access, structured data, targeted data retrieval, data accuracy, and integration capabilities. Other methods of extracting pricing data, such as web scraping with crawlers, bots, and scripts, are widely used, but each has its own challenges. Weighing the pros and cons, APIs come out as the best way to extract pricing data.

The highly competitive eCommerce market, together with the benefits of price scraping, makes a price-scraping strategy a necessity. A dynamic price-scraping mechanism offers multiple benefits, including:

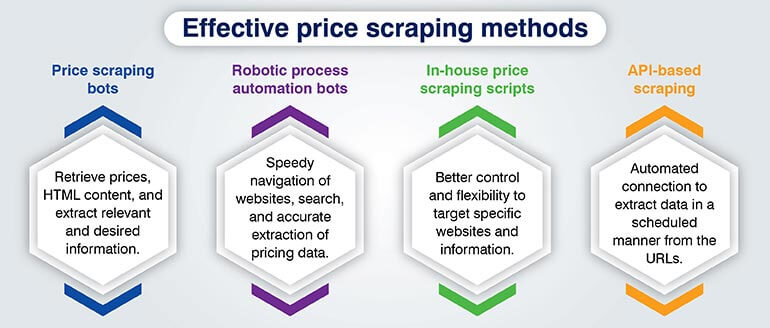

Any eCommerce platform can choose from several price-scraping methods, including price-scraping bots, RPA bots, in-house scripts, and web scraping APIs.

Price-scraping bots are programmed to retrieve pricing and other relevant data from multiple websites. They send HTTP requests to target websites, retrieve the HTML content, and extract the desired information.
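The request-and-extract loop described above can be sketched with Python's standard library. This minimal example is an illustration, not any particular bot's implementation: it parses already-downloaded HTML and collects the text of elements carrying a `price` class. The class name, the sample markup, and the helper names `PriceParser` and `parse_price` are assumptions, since every site marks up prices differently.

```python
import re
from decimal import Decimal
from html.parser import HTMLParser

# Void elements never have an end tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "br", "col", "embed", "hr", "img", "input", "link", "meta", "source", "wbr"}


class PriceParser(HTMLParser):
    """Collect the text of every element whose class list contains `target_class`."""

    def __init__(self, target_class: str = "price"):
        super().__init__()
        self.target_class = target_class
        self.prices = []      # raw price strings, in document order
        self._depth = 0       # > 0 while inside a matching element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._depth:
            self._depth += 1  # nested element inside a price element
        elif self.target_class in (dict(attrs).get("class") or "").split():
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            self.prices.append("".join(self._buffer).strip())

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def parse_price(text: str) -> Decimal:
    """Turn '$1,299.00' into Decimal('1299.00'); assumes '.' is the decimal separator."""
    return Decimal(re.sub(r"[^\d.]", "", text))


page = '<div class="item"><span class="price sale">$1,299.00</span><span class="price">$49.99</span></div>'
parser = PriceParser()
parser.feed(page)
print([parse_price(p) for p in parser.prices])  # [Decimal('1299.00'), Decimal('49.99')]
```

In a real bot the HTML would come from an HTTP response rather than a string literal.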

These bots can be customized to business needs: they can scrape multiple websites simultaneously or target specific pages, and they can be scheduled to run at fixed intervals or daily so that dynamic pricing data is always current. Automating collection from multiple sources saves considerable time and effort.
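Scraping several sites at once can be sketched with a thread pool. In this assumed design, `scrape_all` takes a pluggable `fetch` function (by default a plain `urllib` download), so a failure on one site is recorded without stopping the others. The function names and the `User-Agent` value are hypothetical, and fetching real sites still requires their permission.

```python
import urllib.request
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Iterable


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download one page with the standard library (no retries, no proxies)."""
    req = urllib.request.Request(url, headers={"User-Agent": "price-monitor-sketch/0.1"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def scrape_all(urls: Iterable[str], fetch: Callable[[str], str] = fetch_html,
               max_workers: int = 4) -> dict:
    """Fetch several pages concurrently; errors are collected per URL, not raised."""
    pages, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                pages[url] = future.result()
            except Exception as exc:  # one bad site should not stop the batch
                errors[url] = repr(exc)
    return {"pages": pages, "errors": errors}
```

Running `scrape_all` from a scheduler at fixed intervals gives the "constant availability" described above.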

However, this approach has limitations. First, scraping a website without proper authorization may violate its terms of use. Second, if the target website's structure changes, the bot can return inaccurate data unless you continually monitor data quality and adjust the bot. Finally, websites often deploy anti-scraping measures, so you must take care not to get blocked.
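One way to catch the silent failures that follow a layout change is to sanity-check every scraped value before it reaches pricing decisions. The sketch below is illustrative: the 50% jump threshold and the `check_price` name are assumptions to tune for your own catalog.

```python
from decimal import Decimal
from typing import List, Optional


def check_price(new: Optional[Decimal], previous: Optional[Decimal],
                max_change: Decimal = Decimal("0.5")) -> List[str]:
    """Return a list of problems with a scraped price; an empty list means it looks plausible."""
    if new is None:
        # Often the first sign that the layout changed and the selector no longer matches.
        return ["price missing"]
    problems = []
    if new <= 0:
        problems.append(f"non-positive price {new}")
    if previous is not None and previous > 0:
        change = abs(new - previous) / previous  # relative change since the last run
        if change > max_change:
            problems.append(f"price moved {float(change):.0%} since last run")
    return problems
```

Alerting whenever the returned list is non-empty turns "monitor data quality" into a concrete, automatable step.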

RPA (Robotic Process Automation) bots automate rule-based, repetitive tasks. They are programmed to navigate websites, search for pricing data, and extract it in a structured format quickly and accurately. Because they can run 24/7, they are particularly useful in dynamic markets that need continuous data collection. They can handle large volumes of data and integrate with other systems, transferring the extracted data to other platforms and spreadsheets.

Like price-scraping bots, RPA bots face legal and ethical issues and break when websites change, so they need constant monitoring and updates. Organizations using RPA bots also need to invest in technical expertise to design, build, and maintain them, and may need additional tools to scrape dynamically generated content.

In-house scripts are custom-developed by your own team to perform price-scraping tasks. They give you control and flexibility to design the scraper around your business needs, targeting specific websites and specific information. When website structures or data formats change, the script can be adapted by updating the relevant parts. Besides saving costs, you retain full control of the data and its security. Most importantly, the data can be easily integrated with your internal systems.
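Keeping site-specific extraction rules separate from the extraction logic is one way to make an in-house script easy to adapt. In this sketch the domains, the regular expressions, and the `SITE_RULES` table are all hypothetical. Regular expressions over HTML are fragile, so a production script might use a proper HTML parser instead; the point is that a layout change means editing one rule, not the code.

```python
import re
from decimal import Decimal
from typing import Optional

# Hypothetical per-site rules. When a retailer changes its markup,
# only that site's pattern needs editing; extract_price stays the same.
SITE_RULES = {
    "shop-a.example": re.compile(r'<span class="price">\s*\$([\d,]+\.\d{2})'),
    "shop-b.example": re.compile(r'data-price="([\d.]+)"'),
}


def extract_price(site: str, html: str) -> Optional[Decimal]:
    """Apply the configured rule for `site`; None means the rule no longer matches."""
    rule = SITE_RULES.get(site)
    if rule is None:
        raise KeyError(f"no extraction rule configured for {site}")
    match = rule.search(html)
    if match is None:
        return None  # layout probably changed: surface this to monitoring
    return Decimal(match.group(1).replace(",", ""))
```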

The main challenge of in-house price-scraping scripts is the investment in resources and technical expertise. Training your team and keeping it current with changing technologies takes time and effort. The team needs a thorough understanding of scraping techniques, anti-scraping mechanisms, programming languages, and testing scripts for accuracy. Because target websites keep changing their structure, the scripts must be updated continually to keep the data accurate. Be prepared to invest in training and resources.

A web scraping API (Application Programming Interface) is a tool that creates an automated connection between the requester and a service that extracts data from the specified URLs. Requests can be scheduled, and specific content can be pulled on demand.
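A scraping API is typically called with the target URL and a description of what to extract. The sketch below assumes a hypothetical endpoint (`https://api.scraper.example/v1/extract`) with `url` and `fields` query parameters; real providers define their own endpoints, parameter names, and authentication, so treat this only as the general shape of such a call.

```python
import json
import urllib.parse
import urllib.request
from typing import List

# Hypothetical endpoint; real scraping APIs define their own URL and parameters.
ENDPOINT = "https://api.scraper.example/v1/extract"


def build_extract_url(target_url: str, fields: List[str]) -> str:
    """Request only the fields needed instead of the whole page."""
    query = urllib.parse.urlencode({"url": target_url, "fields": ",".join(fields)})
    return f"{ENDPOINT}?{query}"


def extract(target_url: str, fields: List[str], timeout: float = 15.0) -> dict:
    """Call the (hypothetical) API and decode its JSON response."""
    with urllib.request.urlopen(build_extract_url(target_url, fields), timeout=timeout) as resp:
        return json.load(resp)
```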

An API is faster because it lets you fetch only the specific data you need, presented in a structured format. This reduces unnecessary data transfer and improves overall performance. Because the data is delivered in a machine-readable format, accuracy is higher, and errors caused by changes in website structure are largely avoided. Easy integration with other systems, authorized access, and legal compliance are further benefits of using an API over other web scraping methods. There is also far less risk of being flagged as malicious or blocked.

Compared with web scraping, an API is a good option, but the website must offer a dedicated API. If it does not, web scraping is the only option. Moreover, the data you need may not be exposed through the API, and some APIs impose usage limits or restrict how often data can be extracted.

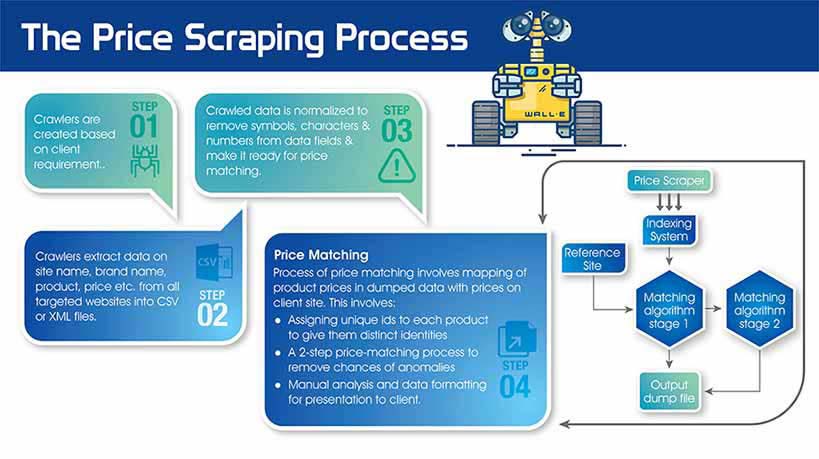

Price scraping is a technique for extracting pricing information from websites. It uses automated web crawling and data extraction to gather the prices of products offered by eCommerce players and online retailers.

Price scraping uses bots or web crawlers to visit target websites, navigate to the relevant product pages, and extract pricing details. These bots can simulate human browsing behavior to gather accurate pricing data. The extracted data is then analyzed, aggregated, and used for purposes such as market research, price comparison, or dynamic pricing strategies. It is advisable to get expert help from experienced service providers when performing price scraping.

API-based scraping is better equipped than other methods to deal with challenges such as interface changes and unauthorized access.

APIs handle interface changes through versioning and backward compatibility. Versioning lets providers modify an API by releasing a new version, while backward compatibility lets existing users keep using the older version as new users adopt the modified one.

When introducing interface changes or deprecating a feature, API providers notify developers well in advance, giving them time to update their code and easing the transition to new versions. Deprecation and sunset policies set a clear timeline for discontinuing deprecated features, and detailed documentation of the changes helps developers fix issues and keep operations running smoothly.
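The `Sunset` HTTP response header (RFC 8594) is one standard way for a provider to announce when an endpoint will be retired, and some providers also send a `Deprecation` header. Whether a given API sends either is an assumption to verify in its documentation. This sketch inspects a response's headers, warns on deprecation, and returns the sunset date so a client can plan its migration.

```python
import warnings
from datetime import datetime
from email.utils import parsedate_to_datetime
from typing import Mapping, Optional


def check_lifecycle_headers(headers: Mapping[str, str]) -> Optional[datetime]:
    """Warn if the response signals deprecation; return the Sunset date, if any."""
    # Header names are case-insensitive, so normalize them before lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    if "deprecation" in lowered:
        warnings.warn(f"API endpoint is deprecated (Deprecation: {lowered['deprecation']})")
    sunset = lowered.get("sunset")
    if sunset is None:
        return None
    # RFC 8594: the Sunset value is an HTTP-date, e.g. "Wed, 31 Dec 2025 23:59:59 GMT".
    return parsedate_to_datetime(sunset)
```

With `urllib`, the `resp.headers` object returned by `urlopen` can be passed in directly.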

API providers issue authentication tokens or API keys to developers. These keys identify the requester and let the provider monitor usage, preventing unauthorized access; requests from suspicious agents or automated scraping bots can be identified and blocked. CAPTCHAs (Completely Automated Public Turing tests to tell Computers and Humans Apart), monitoring tools, and filtering mechanisms further guard against unauthorized entry. The terms of service spell out the legal consequences of unauthorized access, and as new scraping techniques emerge, API providers keep updating their security measures.
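Attaching an API key to each request might look like the following sketch. The Bearer-token `Authorization` header is a common convention but not universal (some providers expect a custom header or a query parameter), and the `PRICE_API_KEY` environment variable and the endpoint URL are hypothetical names used for illustration.

```python
import os
import urllib.request


def load_api_key(env_var: str = "PRICE_API_KEY") -> str:
    """Read the key from the environment rather than hard-coding it in source."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"set {env_var} to your API key")
    return key


def authorized_request(url: str, api_key: str) -> urllib.request.Request:
    """Build a request that carries the key as a Bearer token."""
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {api_key}", "Accept": "application/json"},
    )
```

The returned request can be passed to `urllib.request.urlopen` as usual.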

APIs do not prevent cloaking, but they do guard against unauthorized or malicious use. Cloaking is a black hat SEO practice in which different content is shown depending on the visitor, for example one version to search engine crawlers and another to human users. Tools such as CrawlPhish can automatically detect and categorize the client-side cloaking techniques used by known phishing websites.

Use code libraries and programming languages to interact with APIs: Choose a popular programming language and libraries that help you call APIs and extract price data. Python, JavaScript, PHP, Ruby, and Java are common choices. Based on your requirements, resources, and available tools, you can zero in on the most suitable library for your project.

Automated retrieval of structured and organized data: To retrieve data from a source, start by identifying the API and studying its documentation. Familiarize yourself with the data structure, guidelines, and limitations set by the provider. Authenticate your requests as the API provider specifies, send them to the API server, and parse the responses to extract the relevant data. Then automate the process and schedule it at a frequency that suits your business needs, for example with your operating system's scheduling tools. Throughout, comply with the API's terms and legal requirements.
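These steps (authenticate, send the request, parse the response, schedule) can be sketched end to end in Python. The endpoint `https://api.shop.example/v1/prices` and its response shape, `{"items": [{"sku": ..., "price": ...}]}`, are hypothetical; the HTTP call is injectable so the parsing can be tested without a live API.

```python
import json
import time
import urllib.request
from decimal import Decimal
from typing import Callable, Dict

# Hypothetical endpoint and response shape, for illustration only.
API_URL = "https://api.shop.example/v1/prices"


def http_get_json(url: str, api_key: str) -> dict:
    """Authenticated GET that decodes a JSON body."""
    req = urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"})
    with urllib.request.urlopen(req, timeout=15) as resp:
        return json.load(resp)


def fetch_prices(api_key: str,
                 get_json: Callable[[str, str], dict] = http_get_json) -> Dict[str, Decimal]:
    """Send the request and keep only the fields we need: SKU -> price."""
    payload = get_json(API_URL, api_key)
    return {item["sku"]: Decimal(str(item["price"])) for item in payload["items"]}


def run_periodically(job: Callable[[], None], interval_s: float, runs: int) -> None:
    """In-process scheduling: run `job` every `interval_s` seconds, `runs` times."""
    for i in range(runs):
        started = time.monotonic()
        job()
        if i < runs - 1:
            time.sleep(max(0.0, interval_s - (time.monotonic() - started)))
```

In production it is usually simpler to run the script from the operating system's scheduler, for example a cron entry with the schedule `0 * * * *` for hourly runs, than to keep a process sleeping in `run_periodically`.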

Reduced need for complex parsing or scraping logic: Because APIs return structured data in a standardized format, they reduce the need for complex parsing or scraping logic. Web scraping retrieves raw HTML, whereas an API delivers more organized data.
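Because an API response is already structured, mapping it into typed records takes a few lines and no HTML parsing. This sketch assumes a hypothetical JSON body of the form `{"products": [{"sku": ..., "price": ..., "currency": ...}]}`. Passing `parse_float=Decimal` to `json.loads` keeps prices exact rather than rounding them through binary floating point.

```python
import json
from dataclasses import dataclass
from decimal import Decimal
from typing import List


@dataclass(frozen=True)
class PricePoint:
    sku: str
    price: Decimal
    currency: str


def parse_response(body: str) -> List[PricePoint]:
    """Map a (hypothetical) structured API response straight onto records."""
    data = json.loads(body, parse_float=Decimal)  # 19.99 -> Decimal('19.99'), not a float
    return [PricePoint(p["sku"], Decimal(p["price"]), p["currency"]) for p in data["products"]]
```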

Consider API limitations: APIs offer multiple benefits over web scraping, but make sure you understand their limitations, such as rate limits, data limits, parallel request limits, data chunking, usage quotas, and service availability. An API may cap the number of requests you can make in a given period, the amount of data you can retrieve per request, or the number of concurrent requests. Check all the guidelines of the API and plan your project to stay within its limits.
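Staying within rate limits can be handled on the client side. This sketch combines a simple throttle that spaces calls at least `1 / max_per_second` seconds apart with a retry loop that backs off on HTTP 429 (Too Many Requests), honoring a numeric `Retry-After` header when present. The class and function names and the default limits are assumptions; the right values come from the specific API's documentation.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


class MinIntervalLimiter:
    """Client-side throttle: spaces calls at least 1 / max_per_second seconds apart."""

    def __init__(self, max_per_second: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.interval = 1.0 / max_per_second
        self.clock, self.sleep = clock, sleep
        self._next_allowed = 0.0

    def wait(self) -> None:
        now = self.clock()
        if now < self._next_allowed:
            self.sleep(self._next_allowed - now)
            now = self._next_allowed
        self._next_allowed = now + self.interval


def get_with_backoff(url: str, limiter: MinIntervalLimiter, max_retries: int = 4) -> bytes:
    """GET `url`, retrying on HTTP 429 with exponential backoff or the server's Retry-After."""
    delay = 1.0
    for attempt in range(max_retries + 1):
        limiter.wait()
        try:
            with urllib.request.urlopen(url, timeout=15) as resp:
                return resp.read()
        except urllib.error.HTTPError as err:
            if err.code != 429 or attempt == max_retries:
                raise
            retry_after = (err.headers or {}).get("Retry-After", "")
            # Retry-After may also be an HTTP-date; fall back to our own delay then.
            time.sleep(float(retry_after) if retry_after.isdigit() else delay)
            delay *= 2
    raise RuntimeError("unreachable")
```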

The future of API-based price scraping in the eCommerce industry looks promising, with several trends shaping its path. As more eCommerce platforms recognize the value of APIs for price scraping and other data extraction, API scope and usage are expected to grow, and platforms are gearing up to provide APIs designed specifically for price data extraction.

As APIs become more advanced, businesses can expect accurate, real-time pricing data straight from the relevant sources. Better APIs will deliver higher-quality, more reliable data, helping businesses make informed decisions and stay competitive.

A move toward standardized APIs and common data formats will further simplify integrating data from multiple eCommerce platforms, making it easier to extract pricing information across platforms without handling many different website structures.
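Until formats are standardized, a common workaround is a small adapter per platform that maps each payload onto one internal schema. In this sketch the two platforms, their field names, and the cents-based price field are hypothetical examples.

```python
from decimal import Decimal
from typing import Callable, Dict

# Hypothetical payload formats from two platforms, mapped onto one schema:
# {"sku": str, "price": Decimal, "currency": str}


def from_platform_a(raw: dict) -> dict:
    return {"sku": raw["id"], "price": Decimal(str(raw["price"])), "currency": raw["currency"]}


def from_platform_b(raw: dict) -> dict:
    # This platform is assumed to report prices in minor units (cents).
    return {"sku": raw["productCode"], "price": Decimal(raw["priceCents"]) / 100, "currency": raw["ccy"]}


ADAPTERS: Dict[str, Callable[[dict], dict]] = {
    "platform_a": from_platform_a,
    "platform_b": from_platform_b,
}


def normalize(source: str, raw: dict) -> dict:
    """Convert one raw record from `source` into the common schema."""
    return ADAPTERS[source](raw)
```

Adding a platform then means writing one adapter; everything downstream keeps working with the common schema.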

Security concerns will also be addressed as stricter regulations evolve, and users will need to adhere to API policies to avoid legal trouble.

It’s important to note that the future of API-based price scraping will be shaped by technological advancements, market dynamics, legal and regulatory factors, and the strategies adopted by eCommerce platforms and API providers. Adapting to these changes and staying informed about industry trends will be key to effectively leveraging API-based price scraping in the eCommerce industry.

Price scraping has become an integral part of selling in the eCommerce market and is imperative for success in such a competitive space. If you don't do it, your competitors already are, and they gain that extra advantage. You can use web scraping or APIs to extract pricing data; both methods have advantages and disadvantages. Depending on your requirements, you can choose either method or combine API access with web scraping.

Although APIs are generally more limited in functionality, they provide structured data with high accuracy. API-based price scraping offers a more efficient, reliable, and authorized approach to extracting pricing data in the eCommerce industry.

What’s next? Message us a brief description of your project.

Our experts will review it and get back to you within one business day with a free consultation for successful implementation.

Disclaimer:

HitechDigital Solutions LLP and Hitech BPO will never ask for money or commission to offer jobs or projects. In the event you are contacted by any person with job offer in our companies, please reach out to us at info@hitechbpo.com